Extracting URLs (faster) with Python

The recommended approach to HTML parsing with Python is to use BeautifulSoup. It's a great library and easy to use, but it is a bit slow when processing a lot of documents. In this blog post, I would like to highlight some alternative ways to extract URLs from HTML documents without using BeautifulSoup. I added a performance test at the end to compare the alternatives.

BeautifulSoup

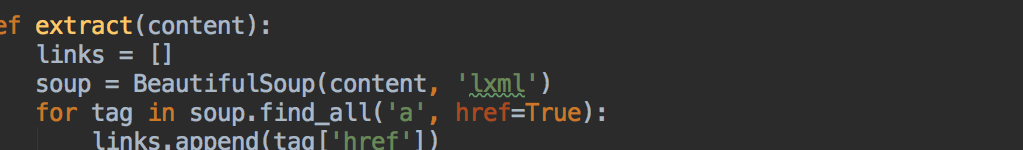

First off, below is the source code to extract links using BeautifulSoup. We will use LXML as the parser implementation for BeautifulSoup because, according to the documentation, it's the fastest. The code uses the find_all function with an anchor (a) tag filter to retrieve only the URLs.

    from bs4 import BeautifulSoup

    def extract(content):
        links = []
        soup = BeautifulSoup(content, 'lxml')
        # href=True keeps only the a tags that actually carry an href attribute
        for tag in soup.find_all('a', href=True):
            links.append(tag['href'])
        return links

Just for the performance test, I added a slightly modified version below which doesn't use the a tag filter and instead checks every tag manually. Will there be any difference in execution time?

    from bs4 import BeautifulSoup

    def extract(content):
        links = []
        soup = BeautifulSoup(content, 'lxml')
        # No filter: walk every tag and check name and attributes by hand
        for tag in soup.find_all():
            if tag.name == 'a' and 'href' in tag.attrs:
                links.append(tag.attrs['href'])
        return links

LXML

One of the underlying parsers used by BeautifulSoup is LXML. While BeautifulSoup provides a lot of convenient functions on top of it, you can use LXML directly. For our URL extraction case, the code is no more complicated, and it strips away all of BeautifulSoup's overhead.

    import lxml.html

    def extract(content):
        links = []
        dom = lxml.html.fromstring(content)
        # The XPath expression selects the href attribute of every a element
        for link in dom.xpath('//a/@href'):
            links.append(link)
        return links

HTMLParser

Python's standard library has an HTML parser built in, and the following snippet uses it to extract URLs. It's a bit more complicated because we need to define our own HTMLParser subclass.

Btw. BeautifulSoup can also use this built-in Python parser instead of LXML as the underlying parser. This is great in case you need a Python-only implementation.

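    # Python 3 module path; on Python 2 the module was named HTMLParser
    from html.parser import HTMLParser

    class URLHtmlParser(HTMLParser):
        def __init__(self):
            super().__init__()
            # Per-instance list, so repeated extract() calls don't share results
            self.links = []

        def handle_starttag(self, tag, attrs):
            if tag != 'a':
                return
            # attrs is a list of (name, value) tuples
            for name, value in attrs:
                if name == 'href':
                    self.links.append(value)
                    break

    def extract(content):
        parser = URLHtmlParser()
        parser.feed(content)
        return parser.links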

Selectolax

During my research I found Selectolax. It's a super fast HTML parser. Under the hood, it uses the Modest engine to do the parsing.

    from selectolax.parser import HTMLParser

    def extract(content):
        links = []
        dom = HTMLParser(content)
        for tag in dom.tags('a'):
            # attributes is a dict of the tag's attribute names and values
            attrs = tag.attributes
            if 'href' in attrs:
                links.append(attrs['href'])
        return links

Regular Expression

As a final alternative, the following code snippet uses a regular expression to match anchor tags directly. Because it doesn't parse the actual HTML DOM, it won't be a fit for every use case - especially when the document is malformed. At the same time, this can be a big plus because it will use less memory and regular expressions are very fast. In any case - use with caution.

    import re

    HTML_TAG_REGEX = re.compile(r'<a[^<>]+?href=([\'\"])(.*?)\1', re.IGNORECASE)

    def extract(content):
        # findall returns (quote, url) tuples; the URL is the second group
        return [match[1] for match in HTML_TAG_REGEX.findall(content)]
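
To make the "use with caution" part a bit more concrete, here is a small sketch that runs the same regular expression and Python's built-in parser over a tiny document. The document, the example.com URLs and the LinkParser class are made up for illustration; they are not part of the benchmark. It shows two cases where the pattern disagrees with a real parser: an unquoted href value and a link inside an HTML comment.

```python
import re
from html.parser import HTMLParser

# Same pattern as in the snippet above
LINK_REGEX = re.compile(r'<a[^<>]+?href=([\'\"])(.*?)\1', re.IGNORECASE)


class LinkParser(HTMLParser):
    """Hypothetical helper: collects href values of a tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        for name, value in attrs:
            if name == 'href':
                self.links.append(value)
                break


# Made-up sample document with three kinds of links
document = '''
<a href="https://example.com/quoted">quoted</a>
<a href=https://example.com/unquoted>unquoted</a>
<!-- <a href="https://example.com/commented">commented out</a> -->
'''

regex_links = [match[1] for match in LINK_REGEX.findall(document)]

parser = LinkParser()
parser.feed(document)

# The regex misses the unquoted link and picks up the commented-out one
print(regex_links)   # ['https://example.com/quoted', 'https://example.com/commented']
# The parser handles the unquoted value and skips the comment
print(parser.links)  # ['https://example.com/quoted', 'https://example.com/unquoted']
```

Whether these differences matter depends on the documents you process; for well-formed pages with quoted attributes the two approaches will usually agree.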

Performance Results

For the performance test, I downloaded the HTML Wikipedia page, which is around 353KB in size and contained 1,839 links at the time of writing this article. I ran each extraction method 1,000 times on this file and used the average runtime as the result.
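
The measurement described above could be reproduced with something along these lines. This is only a sketch of one way to do it with the standard library's timeit module, not the exact script behind the numbers in this post. The average_ms helper, the two stand-in extractors and the generated sample document are assumptions made so the example runs on its own; in practice you would load the saved page from disk and pass in the extract functions from the sections above.

```python
import re
import timeit
from html.parser import HTMLParser

# Stand-in extractors so the sketch is self-contained
LINK_REGEX = re.compile(r'<a[^<>]+?href=([\'\"])(.*?)\1', re.IGNORECASE)


def extract_regex(content):
    return [match[1] for match in LINK_REGEX.findall(content)]


class LinkParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            if href is not None:
                self.links.append(href)


def extract_builtin(content):
    parser = LinkParser()
    parser.feed(content)
    return parser.links


def average_ms(func, content, iterations=1000):
    """Average wall-clock time of func(content) in milliseconds."""
    total_seconds = timeit.timeit(lambda: func(content), number=iterations)
    return total_seconds / iterations * 1000


# Hypothetical generated document with 500 links (the post used a saved page)
sample = '<html><body>' + ''.join(
    f'<p><a href="https://example.com/{i}">link {i}</a></p>' for i in range(500)
) + '</body></html>'

for name, func in [('Regex', extract_regex), ('Python (built-in)', extract_builtin)]:
    print(f'{name}: {average_ms(func, sample, iterations=100):.2f}ms')
```

Absolute numbers from such a run depend on the machine, the Python version and the document, so only the relative order of the methods is really comparable.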

| Method | Time per iteration |
|---|---|
| BeautifulSoup | 373.25ms |
| BeautifulSoup Alt | 260.44ms |
| LXML | 43.02ms |
| Python (built-in) | 155.23ms |
| Selectolax | 17.58ms |
| Regex | 6.01ms |

I didn't expect the differences in execution time between the methods to be so big. It matters a great deal which of them you use. Interestingly, doing the manual filtering with BeautifulSoup is faster than using the a tag filter, which I wouldn't have expected. While the Regex implementation is the fastest, Selectolax is not far off and provides a complete DOM parser. If you don't want to use a Regex or Selectolax, then LXML by itself can still offer decent performance.